The key difference between effective, sustainable Microsoft 365 Copilot adoption and short-term AI initiatives isn’t in the technology itself — it’s whether organizations can bridge the gap between boardroom strategy and employee behavior.

McKinsey reports that companies with leading digital and AI capabilities outperform slower adopters by two to six times on total shareholder returns. Yet most organizations can't effectively measure AI investment value or ensure secure usage.

The answer: learning and monitoring Microsoft 365 Copilot prompts.

Tracking and analyzing Microsoft 365 Copilot prompt usage patterns provides concrete metrics to measure AI value and productivity gains. This practice also serves as a critical security layer for insider threat detection and data governance.

The Cost of AI Measurement Gaps

Current workplace realities highlight this urgency. Deloitte finds that six out of 10 employees view AI as a colleague, yet McKinsey shows that executives dramatically underestimate workforce AI adoption: they estimate that only 4% of employees use generative AI (GenAI) for at least 30% of their daily work, when the actual share is three times higher. This leadership-employee disconnect creates measurement gaps and security blind spots that prompt monitoring addresses.

Organizations without systematic prompt tracking miss out on productivity insights while remaining vulnerable to insider threats and compliance violations. Those leveraging prompt analytics gain dual advantages: clear visibility into AI-driven business value and enhanced security posture in an increasingly complex threat landscape.

When business leaders fail to define and measure the value of AI, they risk more than just inefficiency — they also risk disengaging the very workforce that AI is meant to empower. Without visibility into how AI tools are used and the outcomes they drive, organizations miss critical opportunities to recognize employee innovation, optimize workflows, and reinforce trust in digital investments.

This information gap can lead to demotivation and underutilization of AI capabilities, ultimately limiting ROI on technologies that never reach their maximum potential.

Beyond AI Enablement: The Security Imperative

Learning and tracking AI prompts can pave the way for organizations to boost two critical areas of operations:

Identifying Key Business Needs and Challenges

Tracking frequent Copilot prompts provides insights into organizational needs and challenges. This information supports critical areas:

- Targeted solutions. Understanding common queries submitted to Microsoft 365 Copilot gives leaders opportunities to develop targeted solutions and strategies to address workforce challenges.

- Resource allocation. Frequently used prompts underscore areas where resources are lacking or where management can provide additional support. This enables leaders to allocate resources more efficiently and ensures that critical areas are given the necessary attention.

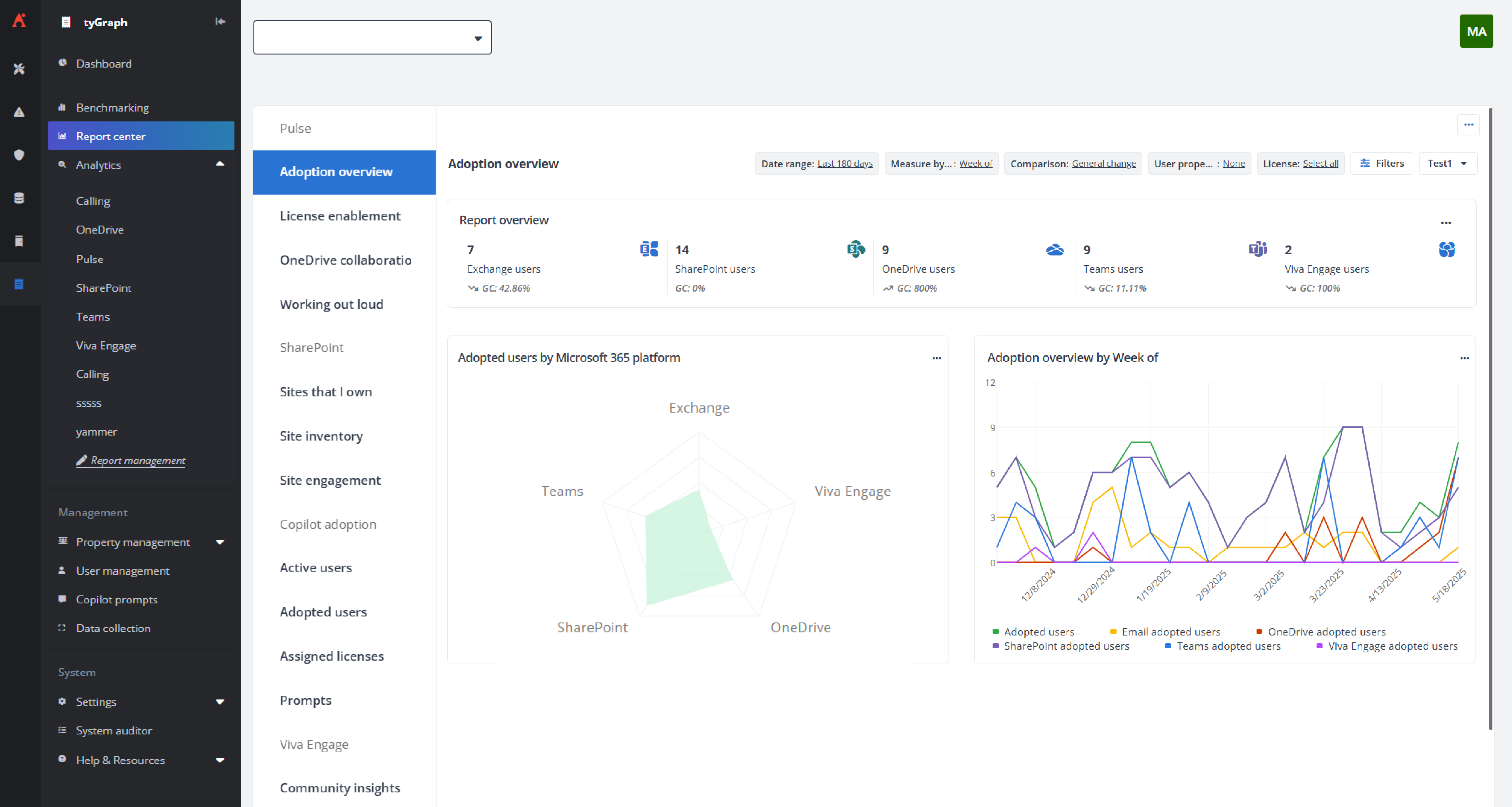

By monitoring the common prompts used, as well as the frequency and nature of Copilot use cases, leaders can also assess how AI empowers specific teams or departments to achieve their business goals:

- Performance metrics. Frequent Copilot usage can indicate how well employees are leveraging AI and how effective these tools are for solving issues. High usage rates, for example, suggest that AI tools deliver valuable assistance and are integral to operations.

- Optimizing processes. Patterns in Copilot prompts give leaders insights into either inefficient or problematic processes. These insights can jumpstart process improvements, creating opportunities for increased productivity and enhanced operations.
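As a rough illustration of the kind of aggregation described above, the sketch below counts prompts per department and surfaces each department's most common prompt topics. The record fields (`user`, `department`, `topic`), the sample data, and the function name `summarize_prompts` are hypothetical and chosen for illustration only; they do not reflect any actual Microsoft 365 Copilot export format.

```python
from collections import Counter, defaultdict

# Hypothetical prompt log records. The field names are assumptions for
# illustration, not a real Microsoft 365 Copilot export schema.
SAMPLE_LOG = [
    {"user": "ana", "department": "Finance", "topic": "budget summary"},
    {"user": "ben", "department": "Finance", "topic": "budget summary"},
    {"user": "cho", "department": "Finance", "topic": "invoice lookup"},
    {"user": "dev", "department": "HR", "topic": "policy question"},
]


def summarize_prompts(records, top_n=2):
    """Return prompt volume and the most frequent topics for each department."""
    topics_by_dept = defaultdict(Counter)
    for record in records:
        topics_by_dept[record["department"]][record["topic"]] += 1

    summary = {}
    for dept, topics in topics_by_dept.items():
        summary[dept] = {
            "total_prompts": sum(topics.values()),
            "top_topics": topics.most_common(top_n),
        }
    return summary


if __name__ == "__main__":
    for dept, stats in summarize_prompts(SAMPLE_LOG).items():
        print(dept, stats)
```

A summary like this could feed both of the uses listed above: high-volume topics point to where targeted solutions or extra resources may help, and per-department totals give a first, coarse view of adoption.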

Beyond boosting operational efficiency and enabling growth, learning and monitoring Copilot prompts can provide valuable support for one critical area that organizations cannot afford to neglect amid an endlessly evolving threat landscape: their security posture.

Prompt reporting serves a purpose beyond detecting security threats: while IT teams support employees who are learning AI tools, information security (infosec) teams and security operations centers (SOCs) must ensure employees aren't using AI inappropriately.

Building on Established Security Practices

IT teams often support employees who are new to AI tools or are encountering roadblocks. Beyond basic assistance, IT also monitors for and helps prevent unethical AI usage. Examples include entering prompts to access information an employee does not need for their role, or accessing data for personal gain, such as querying client account balances beyond their job scope or probing for executives' salaries.
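One simple way to picture this kind of check is a rule that compares the sensitive terms in a prompt against the requesting user's role. The sketch below is deliberately naive and rests on assumptions: the term list, the role names, and the function `flag_out_of_scope` are all hypothetical, and plain keyword matching would miss paraphrased requests that a production system would need to catch.

```python
# Hypothetical mapping of sensitive terms to the roles cleared to ask about
# them. Both the terms and the role names are illustrative assumptions.
SENSITIVE_TERMS = {
    "account balance": {"account_manager"},
    "salary": {"hr_compensation"},
}


def flag_out_of_scope(prompt, role):
    """Return the sensitive terms in `prompt` that `role` is not cleared for."""
    text = prompt.lower()
    return [
        term
        for term, allowed_roles in SENSITIVE_TERMS.items()
        if term in text and role not in allowed_roles
    ]


if __name__ == "__main__":
    print(flag_out_of_scope("What is the CEO's salary?", "marketing"))
    print(flag_out_of_scope("Show the account balance for this client", "account_manager"))
```

An empty result means nothing in the prompt fell outside the role's scope; any returned terms could be routed to a reviewer instead of triggering an automatic block.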

Securing appropriate AI use builds on existing practices. Security professionals already track how users leverage existing technologies:

- Microsoft Teams: Identifying defunct teams requiring decommissioning

- SharePoint sites: Tracking high-traffic sites and understanding usage patterns

- Critical for SharePoint: Identifying sites with sensitive information requiring immediate compliance updates

However, the continuous evolution of AI technologies calls not just for organizational agility but also for constant vigilance against security threats.

AI Agents: A New Opportunity for Security

AI agents demand enhanced data governance and centralized prompt visibility — including monitoring for insider and external threats.

Even with user permissions and agent restrictions in place, security vulnerabilities persist. For example, a compromised user who fails to secure their AI agent can inadvertently grant access to malicious actors, both internal and external, allowing them to use prompts that expose sensitive information.

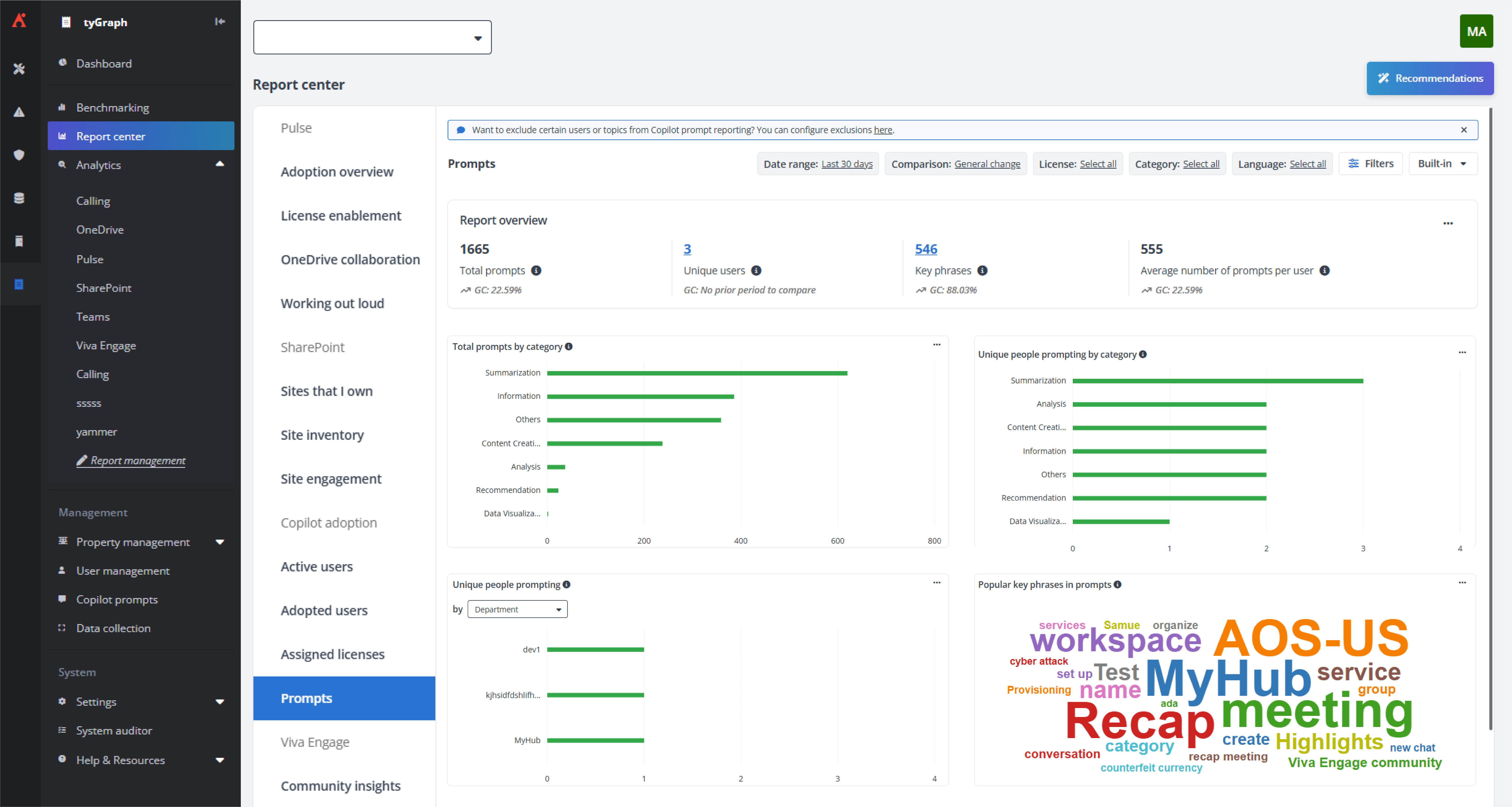

Key Insights Through Prompt Analytics

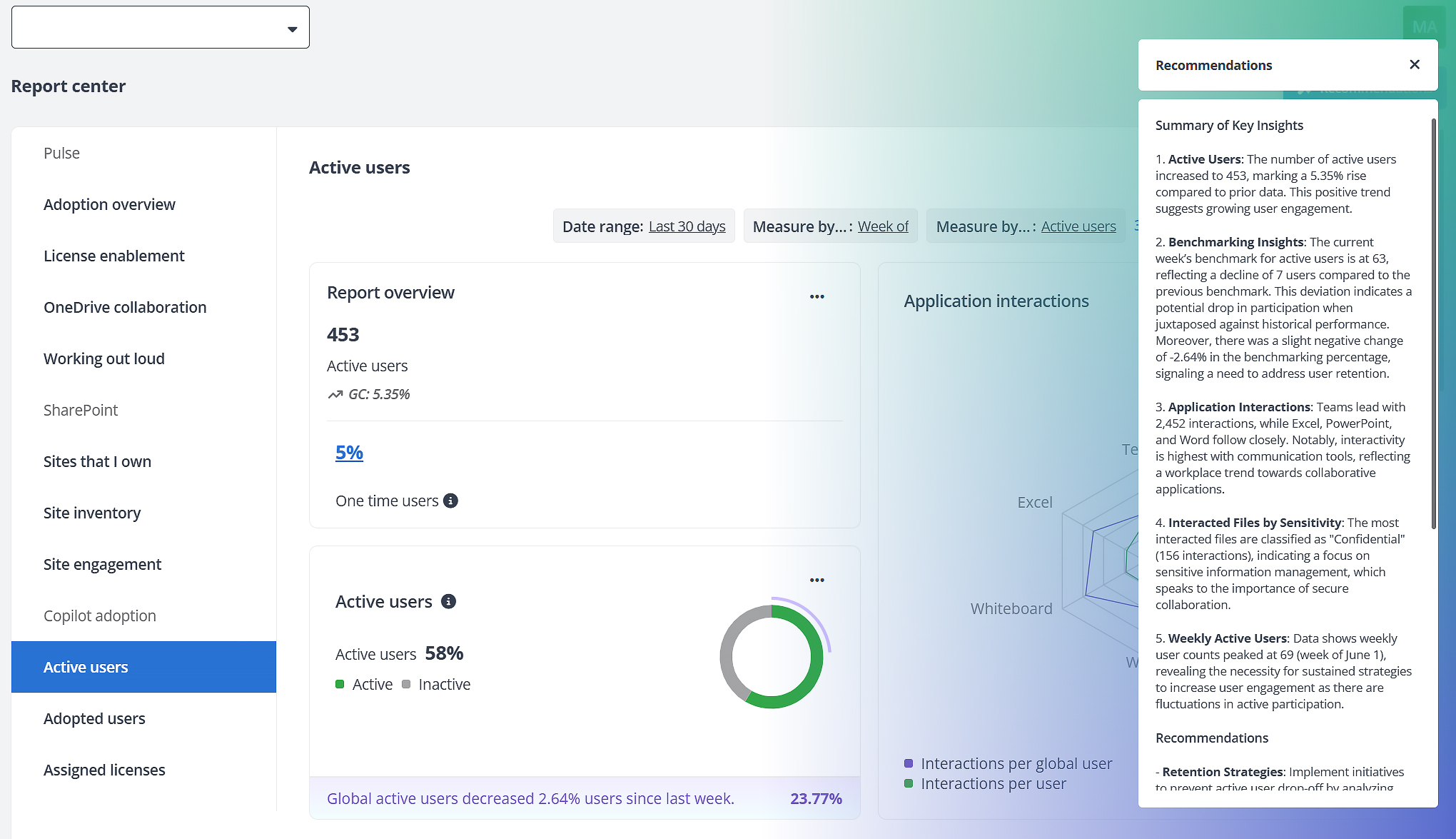

In an age of increasingly sophisticated cyberattacks, efficient incident response requires consolidating information in a centralized risk posture command center that aggregates users, usage patterns, apps, sites, and documents. However, business teams need these complex datasets translated into clear, actionable insights without requiring specialized expertise.

From Patterns to Priorities: Turning Prompt Data Into Actionable Intelligence

Prompt reporting enables organizations to immediately identify suspicious activity patterns and receive alerts for security risks, including:

- Compromised user accounts.

- Social engineering attempts.

- Ransomware indicators.

Centralized prompt and usage data access becomes powerful when organizations automatically generate human-readable insights from complex datasets. Advanced reporting systems leverage AI to translate prompt patterns and productivity metrics into clear, prioritized action items.

This intelligence layer informs organizations on how to respond to both operational challenges and productivity opportunities. Instead of spending valuable time deciphering what prompt frequency spikes or usage patterns actually mean, business and IT leaders receive straightforward summaries that can pinpoint teams with exceptional AI adoption rates or identify departments where employees are struggling with prompt effectiveness.

Insights from a Copilot prompts report empower leaders to implement strategies that encourage users to adopt and use Copilot regularly, meeting and even exceeding global benchmarks while staying aligned with internal security policies and regulatory standards. In addition, identifying areas of consistent underperformance against these benchmarks enables organizations to conduct feedback sessions that uncover key adoption barriers.

Prompt Intelligence for Security Teams: Answering the Right Questions Fast

In case of security incidents, a risk posture command center helps IT teams identify threat sources. Meanwhile, AI-powered analysis automatically surfaces the most critical findings in plain language, eliminating the need for manual data interpretation. Rather than requiring security analysts to become experts in reading complex visualizations, intelligent reporting systems immediately provide clear answers to essential inquiries:

- Did a user access information inappropriate to their role and scope of responsibilities?

- Did a user deviate from their usual pattern of behavior and use prompts in an unethical way?

- Which user or users are behind AI agents that are carrying out or attempting to carry out risky prompts?

Armed with the answers to these questions, security teams can expedite incident response and gain timely insight into critical security gaps.
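The second question above, whether a user has deviated from their usual pattern of behavior, can be approximated with a basic statistical baseline. The following is a minimal sketch under stated assumptions: it looks only at a user's daily prompt counts, the function name `is_unusual` and the default threshold of three standard deviations are illustrative choices, and real behavioral analytics would weigh many more signals than volume alone.

```python
from statistics import mean, stdev


def is_unusual(history, today, threshold=3.0):
    """Flag `today` if it sits more than `threshold` standard deviations
    above the mean of the user's historical daily prompt counts."""
    if len(history) < 2:
        return False  # not enough data to estimate a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any increase stands out.
        return today > baseline
    return (today - baseline) / spread > threshold


if __name__ == "__main__":
    past_week = [10, 12, 11, 9, 10]  # hypothetical daily prompt counts
    print(is_unusual(past_week, 40))
    print(is_unusual(past_week, 12))
```

A flag from a check like this is a reason to look closer, not proof of misuse; a spike may simply reflect a deadline or a newly discovered use case.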

From Insight to Action: A Smarter Path Forward

By combining comprehensive prompt tracking with AI-powered interpretation, organizations gain more than just visibility — they gain clarity. This intelligent approach empowers teams to act swiftly on security threats and productivity opportunities alike, transforming complex data into actionable insights without requiring data expertise. The result: confident, timely, data-backed, and secure decision-making.